Our Position

The Bail Project opposes the use of pretrial algorithms to help decide whether an individual is incarcerated pretrial. Together with data scientists and legal scholars, we argue that pretrial algorithms, also known as risk assessment tools, disproportionately harm people of color, oversimplify complex individual and case outcomes, undermine standards of procedural justice, and rely on data that is inaccurate, outdated, and unreliable.

What are Pretrial Algorithms?

Pretrial algorithms (risk assessments) are statistical models designed to help judges make release decisions by predicting the likelihood that someone will fail to appear at a future court appearance, the likelihood that they will be arrested if they are released from jail before trial, or both. Like the algorithms that produce credit scores or determine the ads you see on social media, these tools make predictions about an individual based on group data. The resulting scores are typically represented as high-, medium-, and low-risk categories and are delivered to judges for their consideration at someone’s first court appearance. These scores are sometimes accompanied by release recommendations (i.e., release on recognizance, release with conditions, or remand/detain). Pretrial algorithms are in use in at least 60 jurisdictions nationally, and approximately 25% of the U.S. population lives in a county or state where they would be subject to one if they were arrested.

Pretrial algorithms cannot predict what they claim to – they are inexact in their measurement of flight and public safety risk.

In their current state, when assessing risk of flight, pretrial algorithms try to answer the question: “What is the probability that someone will not appear at any future court appearance?” However, this is the wrong question for the court to consider because one missed court appearance is not representative of “flight.” Currently, pretrial algorithms incorporate data that considers all instances of non-appearance, which include such mundane things as having a medical emergency, needing to care for a child, not having the day off from work, or simply forgetting. This creates a net-widening effect and ensures that pretrial algorithms cannot predict what most people assume they do – the rare and exceptional instances when a person deliberately leaves the county, state or country or goes into hiding.

To accurately assess flight, pretrial algorithms would have to answer the following question: “What is the probability that this person will intentionally evade prosecution?” However, this is a question that cannot be answered with statistical analysis based on group data.

Similarly, when assessing public safety risk, the relevant question is: “What is the probability that a person will commit a crime that results in harm to another person or persons while released pretrial?” As with flight risk, pretrial algorithms cannot answer this question. Instead, they create a second net-widening effect because their calculations depend on generalized data about criminal justice involvement, including technical parole violations and addiction- and poverty-related arrests. The calculation also factors in both arrests and convictions, even though a large proportion of arrests are ultimately dismissed.

Pretrial algorithms depend on group data that is riddled with racial disparities. As a result, their recommendations are racially biased and disproportionately harm communities of color.

Pretrial algorithms rely on historical data to make present-day predictions. This data, however, is not an objective representation of criminal activity. There is overwhelming evidence that racial disparities exist at every stage of the criminal justice process. Across this country, communities of color experience higher levels of police enforcement and surveillance activity than their white counterparts, which increases the likelihood and rate of arrest for people living in these communities of color, independent of whether more criminal activity actually exists there. These disparities flow throughout the criminal legal system – from bail setting and charging practices to conviction rates. As a result, people of color are vastly over-represented in our country’s jails and prisons, and more likely to have criminal records, which then put them at a disadvantage when processed through a pretrial algorithm.

Pretrial algorithms do not account or control for this reality. Instead, they reinforce racial disparities by obscuring this important context under a veneer of objectivity. Pretrial algorithms themselves produce overtly racist results because they are prone to the production of false positives – instances where someone is deemed a high risk, but they do not act as predicted. These false positives occur more frequently with Black people than white people (Angwin, J., Larson, J., & Mattu, S., 2016, “Machine Bias: There’s Software Used Across the Country to Predict Future Criminals. And It’s Biased Against Blacks,” ProPublica). As a result of this bias, Black people who are not actually a risk for new arrests are more likely to receive more restrictive release recommendations from these tools, and are incarcerated at higher rates, simply because they are Black. This same bias does not exist with white people, who are more likely to receive lenient release recommendations, simply because they are white.
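To make the term concrete, the following is a minimal Python sketch of how a false positive rate can be compared across groups. Everything in it is an assumption for illustration: the field names, the group labels "A" and "B", and the eight toy records are invented and are not drawn from ProPublica's analysis or from any real tool.

```python
def false_positive_rate(records):
    """Share of people who were NOT re-arrested but were still flagged high risk."""
    not_rearrested = [r for r in records if not r["rearrested"]]
    if not not_rearrested:
        return None  # rate is undefined when nobody falls in this category
    flagged = sum(1 for r in not_rearrested if r["flagged_high_risk"])
    return flagged / len(not_rearrested)


def false_positive_rate_by_group(records):
    """Compute the false positive rate separately for each group label."""
    groups = {}
    for r in records:
        groups.setdefault(r["group"], []).append(r)
    return {group: false_positive_rate(rs) for group, rs in groups.items()}


# Hypothetical toy records, invented purely to show the calculation.
TOY_RECORDS = [
    {"group": "A", "flagged_high_risk": True, "rearrested": False},
    {"group": "A", "flagged_high_risk": True, "rearrested": False},
    {"group": "A", "flagged_high_risk": False, "rearrested": False},
    {"group": "A", "flagged_high_risk": True, "rearrested": True},
    {"group": "B", "flagged_high_risk": True, "rearrested": False},
    {"group": "B", "flagged_high_risk": False, "rearrested": False},
    {"group": "B", "flagged_high_risk": False, "rearrested": False},
    {"group": "B", "flagged_high_risk": True, "rearrested": True},
]

rates = false_positive_rate_by_group(TOY_RECORDS)
print(rates)
```

In this toy data, both groups contain the same number of people who were not re-arrested, yet more of them are flagged as high risk in one group than in the other. That gap is the kind of disparity the term "false positive" refers to here.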

Pretrial algorithms oversimplify complex individual and case outcomes.

Pretrial algorithms are blunt instruments that erase the nuance in a person’s life and ignore the many different circumstances that people encounter pretrial. The outputs they produce do not distinguish between a non-appearance that occurs because someone fled the county and a non-appearance that occurs because someone had a medical emergency or couldn’t arrange childcare. The difference between these cases is one of intent (i.e., whether someone purposefully missed a court appearance). Pretrial algorithms do not and cannot determine whether the behavior they predict is intentional or not.

Pretrial algorithms also do not try to differentiate among someone arrested and charged with a technical violation pretrial (e.g., failing a drug test, missing curfew), someone who is arrested and charged with loitering because they are unhoused, someone charged with stealing because they are struggling with poverty, or someone who intentionally seeks to harm someone through a robbery or domestic violence – these are vastly different circumstances motivating very different people, yet pretrial algorithms treat them as a single, monolithic group. Pretrial algorithms are problematic because they disregard the complexity of individual life circumstances, remove context altogether, and treat vastly different circumstances as the same, which goes against the principles of individualized justice that our criminal legal system is predicated upon.

| Outcome | Outcome Severity | How it is recorded in a pretrial algorithm |
| --- | --- | --- |
| Client missed court date because of medical emergency | Mild | Non-appearance |
| Client missed court date because they forgot | Mild | Non-appearance |
| Client missed court date because they fled country | Severe | Non-appearance |
| Client missed court date because they went into hiding | Severe | Non-appearance |
| Client re-arrested because they are unhoused and charged with loitering for sleeping in a park | Mild | New criminal arrest |
| Client re-arrested because they were on parole and violated their curfew | Mild | New criminal arrest |
| Client re-arrested because they were charged with murder | Severe | New criminal arrest |
| Client re-arrested because they were charged with sex trafficking of a minor | Severe | New criminal arrest |
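The collapse shown in the table can be sketched in a few lines of Python. The outcomes, severities, and recorded labels come from the table above; the tuple layout and function names are hypothetical and do not represent the schema of any real risk assessment tool.

```python
from collections import Counter

# (description, severity, recorded label), taken from the table above.
# The data structure itself is an illustrative assumption.
OUTCOMES = [
    ("missed court: medical emergency", "mild", "non-appearance"),
    ("missed court: forgot", "mild", "non-appearance"),
    ("missed court: fled country", "severe", "non-appearance"),
    ("missed court: went into hiding", "severe", "non-appearance"),
    ("re-arrested: loitering while unhoused", "mild", "new criminal arrest"),
    ("re-arrested: parole curfew violation", "mild", "new criminal arrest"),
    ("re-arrested: murder charge", "severe", "new criminal arrest"),
    ("re-arrested: sex trafficking of a minor charge", "severe", "new criminal arrest"),
]


def recorded_labels(outcomes):
    """Return only the recorded label for each outcome, dropping all context."""
    return [label for _description, _severity, label in outcomes]


def severities_per_label(outcomes):
    """Collect the different severities hidden behind each recorded label."""
    hidden = {}
    for _description, severity, label in outcomes:
        hidden.setdefault(label, set()).add(severity)
    return hidden


label_counts = Counter(recorded_labels(OUTCOMES))
hidden_severities = severities_per_label(OUTCOMES)
print(label_counts)
print(hidden_severities)
```

Eight distinct situations reduce to two labels, and each label covers both mild and severe outcomes. Once only the label is stored, nothing downstream can tell those cases apart.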

Because an algorithm offers a quick risk score for each individual, judges can sidestep the difficult and sometimes time-consuming work required to establish that there is any evidence, beyond mere speculation, that a person will evade prosecution or willfully harm another person. The existence of pretrial algorithms tempts time-strapped judges to avoid substantive hearings that require the presentation of evidence to support that risk. This denies defense counsel an opportunity to protect their clients from gross speculation, and it comes at a cost to individual justice: an increased probability that someone legally presumed innocent will be prematurely and unfairly deprived of their liberty.

The data used to develop pretrial algorithms are often inaccurate, outdated, and unreliable.

Pretrial algorithms cannot keep pace with changes in the criminal legal system. Their estimates and predictions are often based on outdated information, which has real-life negative consequences for people. For example, the appointment of a new police chief who modifies law enforcement practices can completely upend the predictive ability of the tool. If prosecutors charge more aggressively or there are more police on the street, the predictive ability of the tool is again weakened. If a behavior that was previously criminalized, like marijuana possession, is decriminalized or legalized, old convictions for it will continue to factor into the risk assessment tool even though the underlying behavior is no longer considered criminal activity.

There’s a saying that statisticians use for bad data science like this: “Garbage in, garbage out.” With such problematic data feeding these algorithms, we can’t be confident that their outcomes can be trusted at all.

Conclusion

Pretrial algorithms are wholly inadequate and not appropriate for use when judges and judicial officers make pretrial decisions. Their failure to account for structural bias that impacts communities of color, their predictive inaccuracy, and the incentive they create for shortcuts in the administration of justice create too many liabilities for them to be applied safely to a question as critical as a person’s individual liberty. The Bail Project envisions a reformed pretrial system that balances respect for the due process rights of an individual with the need to protect public safety and the integrity of the legal process.

The current system, which relies heavily on pretrial algorithms, does not address how to effectively avoid flight or protect the public. It does not provide guidance about what the legal system can do or provide to ensure the person appearing before it returns to court and does not hurt someone while in the community. When we talk about “public safety” we must also consider the safety of those who are incarcerated and are exposed to the violence and trauma that are common within custodial settings. Judges should keep in mind the balance between trying to predict the unknowable (i.e., harm that could be committed once released) and the known harm of jailing someone.

Further, to ensure that people return to court, we need to focus on supports that address common obstacles and unmet needs, such as transportation, treatment, and housing. To protect public safety, we must conduct individualized hearings that take into account the details of the accused person’s life circumstances and weigh real evidence, not flawed data.

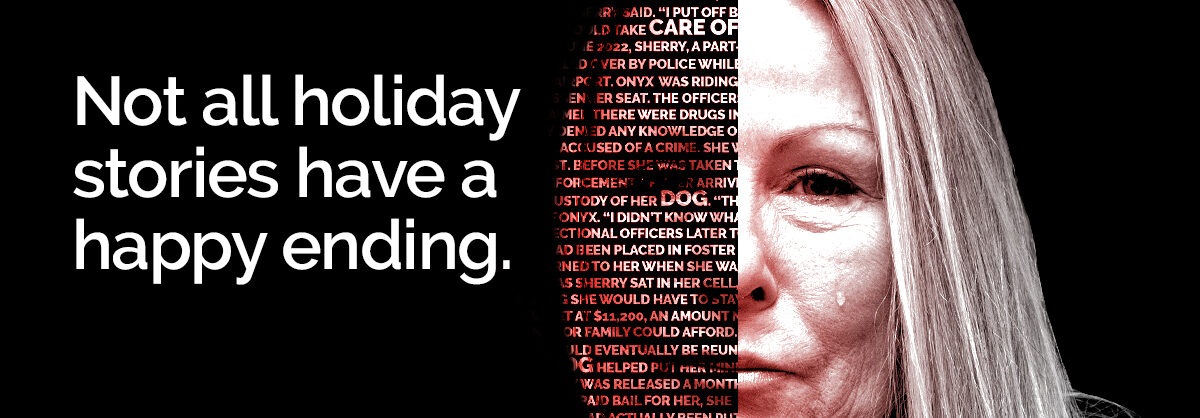
