Imagine walking into a courthouse and finding a computer seated at the judge’s bench. No one wearing a robe, no gavel, and no human interaction: just an open laptop calculating responses, scores, and judicial decisions as you speak. This may seem hard to imagine, and don’t worry, it’s not happening yet. You may be surprised, however, to learn that similar technology is already being used in courts.

What are Pretrial Risk Assessment Tools?

Pretrial risk assessment tools (RATs) are statistical models intended to help judges make detention or release decisions. They attempt to predict the likelihood that a person accused of a crime will fail to appear at a future court date, engage in criminal activity if released pretrial, or both. Like other predictive algorithms, these risk tools estimate an individual’s future behavior from data that usually includes personal information such as age, employment status, and housing status, along with prior criminal legal history. The resulting scores typically categorize a person as high-, medium-, or low-risk. Judges then receive these scores to consider when deciding whether a person will be detained or released pending trial. Over 60 jurisdictions across the United States use a risk assessment tool in their pretrial system.

Can We Trust Them?

The problem with relying on an algorithm to predict human behavior is that it oversimplifies complex individual outcomes and unique human experiences. One primary goal of a risk assessment tool is to answer the question, “What is the probability that someone will not appear at a future court appearance, be rearrested, or threaten public safety?”

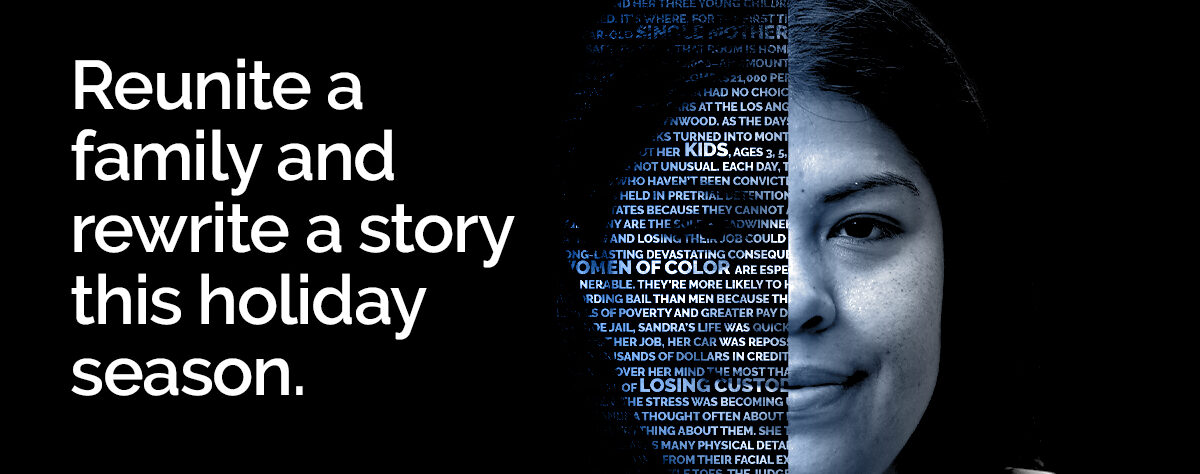

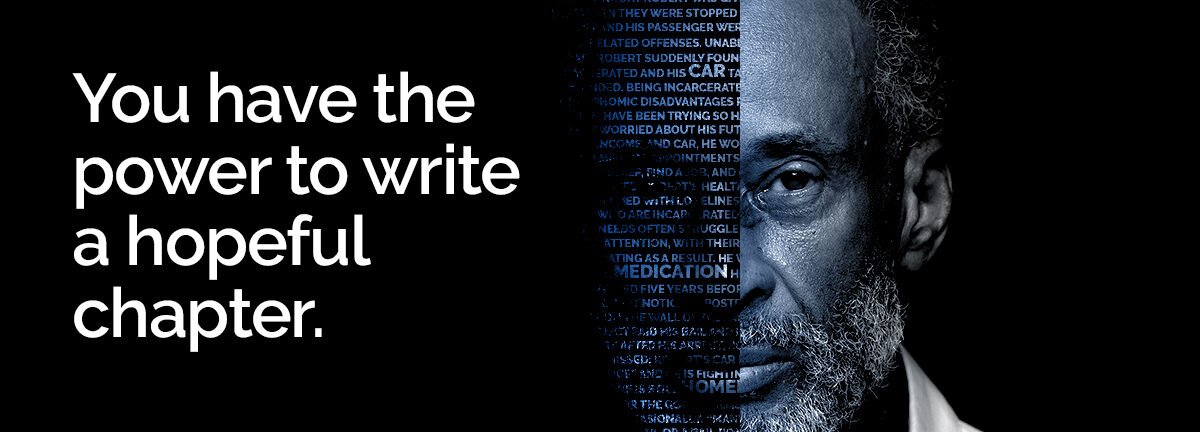

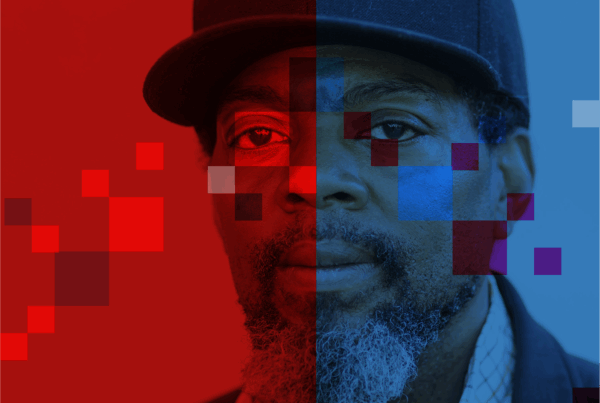

What RATs fail to do is sufficiently assess individual circumstances. They’re built on outdated, limited, and biased data, warped by racial disparities in policing, charging, and case outcomes, and that data doesn’t accurately represent a person’s actual risk of flight or threat to public safety. These algorithms incorporate the same biases as our existing criminal justice system, many formed through decades of systemic oppression against disadvantaged communities, particularly communities of color. Even when algorithms remove race from the risk score calculations, the discriminatory outcomes remain, because inputs such as prior arrests still carry the imprint of those disparities. One study, which examined a group of people who had been arrested, found that Black individuals were twice as likely as otherwise similar white people to receive a high-risk score.

When reforming the criminal justice system, we should examine the root causes of crime instead of calculating risk scores.

Reducing someone to a risk score calculated by an algorithm undermines due process and can result in people being unnecessarily jailed, rather than released and appropriately supported in their communities before trial. We already know better ways to reduce the likelihood of pretrial rearrest: for example, maintaining frequent contact through court notifications and providing access to stable jobs, housing, childcare, and mental health care, all of which can prevent justice system involvement altogether. Take it from our clients, who return for over 90% of their court dates without any of their own money on the line.

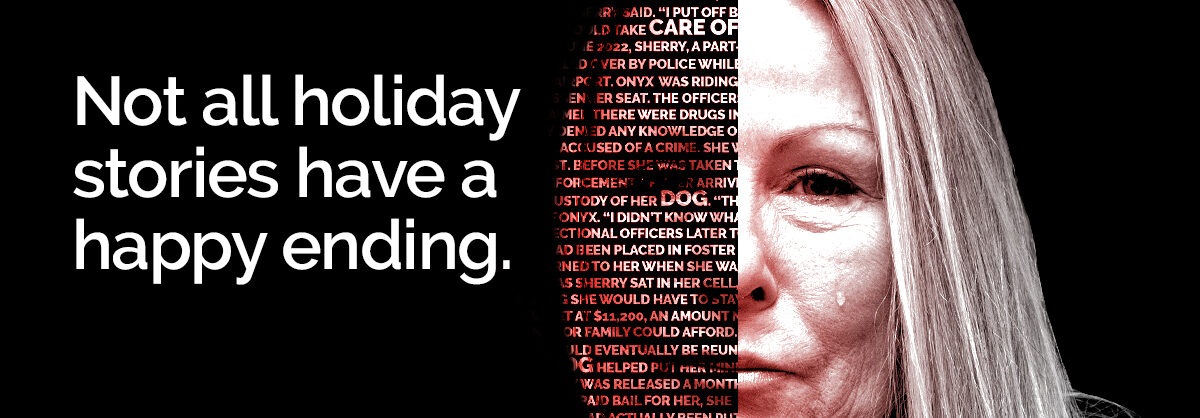

What Happens When Pretrial Risk Assessment Tools Are Wrong?

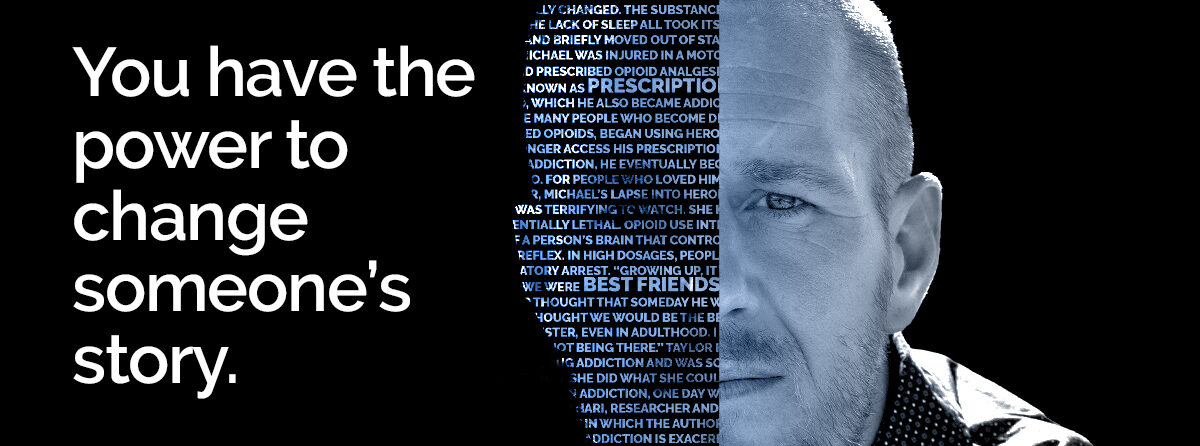

Beyond the racial disparities and inconsistencies perpetuated by algorithmic risk scores, we’re witnessing similar problems with other AI tools. BuzzFeed was recently criticized for amplifying racial and cultural inaccuracies after publishing AI-generated images of Barbies from around the world. Canva also faced backlash after its AI design tool repeatedly produced renderings of Black children when a user prompted it to create images of juvenile defendants wearing ankle monitors.

If the thought of AI judges makes you nervous, consider today’s realities with risk assessment tools. They should make you wonder why a system designed to evaluate and judge human behavior would adopt methods that fall short of understanding the unique intricacies of human life. Why would we trust a tool more than an individualized hearing?

At The Bail Project, we believe in smart, evidence-based decision-making, choosing authenticity over artificiality and compassion over algorithms. That’s why we are critical of risk assessment tools, which lack the nuanced understanding and empathy that human judgment provides. Our Community Release with Support model prioritizes human dignity and effective support, showing that personalized care leads to real change. Together, we can prevent a future dominated by algorithms and instead build one that values human judgment and compassion.

Thank you for reading. The Bail Project is a 501(c)(3) nonprofit organization that is only able to provide direct services and sustain systems change work through donations from people like you. If you found value in this article, please consider supporting our work today.